Anand Krishnaswamy

In an article published earlier in this magazine (https://www.teacherplus.org/making-edtech-an-ally/), I had recommended that we “Make measurement and analysis of measurement a vital function of running the school”. Very few would disagree with the rationale behind this, but many are flustered when asked to provide steps for implementation. I think it is essential that schools be supported in making measurement, and the analysis of measurement, a vital function. There are multiple aspects that need support: what to measure, how to measure, how to record, how to analyze, and most importantly, how to respond (which is itself composed of many facets). While it might seem arduous or contrived to do all this “rather than teach”, one must not forget that teaching is responding to the learner’s state of being to ensure further development of cognitive, socio-emotional, and psychomotor skills.

Consider a common scenario: two students have scored exactly the same final score, 79 out of 100, in an exam. That is one kind of data. Now ask the teacher what each of them needs to do to raise that score to 90. To 100? If she has no answer other than “S/He must study harder/better” or some version of “S/He should be more disciplined”, then you know that a huge amount of valuable insight is not being extracted from the large amount of data that an exam performance provides. And this is merely one three-hour exam out of the whole year!

Imagine if every dialogue within a school swiftly led to a demand for the data that establish the basis of the statements being made, and a challenge to the model being used to draw conclusions! Imagine the same dialogue between teacher and student, with the student demanding to see the data and verify the model the teacher is using! Rather than receiving a cryptic 79, what if a student asked: (a) What are my strengths? (b) Which topics or forms of questions am I struggling with? (c) Are there holes in my strategy? (d) What data back your responses? (e) What is the one thing which, if changed, would improve my performance significantly? This would push teachers and school leaders to look at their work differently. Most schools simply classify the questions in their summative assessments by marks: 1-mark, 2-mark, 3-mark and 5-mark questions. What is the performance per chapter? Per domain or thread? What about performance against cognitive skills? This is where schools need to head. This is when data become part of their culture.
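To make the contrast concrete, here is a minimal Python sketch of scoring by chapter and by cognitive skill. It assumes each question on the paper has been tagged with one chapter and one skill; the question IDs, tags, marks, and the function name `breakdown` are all invented for illustration and are not drawn from any real paper.

```python
from collections import defaultdict

# Hypothetical question paper: each question is tagged with a chapter and a
# cognitive skill, and carries a maximum mark. All values are illustrative.
QUESTIONS = {
    "Q1": ("Fractions", "Recall", 20),
    "Q2": ("Fractions", "Application", 30),
    "Q3": ("Geometry", "Recall", 20),
    "Q4": ("Geometry", "Application", 30),
}


def breakdown(scores, index):
    """Sum marks obtained and marks available per tag.

    index=0 groups by chapter, index=1 groups by skill.
    Returns {tag: (obtained, available)}.
    """
    totals = defaultdict(lambda: [0, 0])
    for qid, obtained in scores.items():
        tag = QUESTIONS[qid][index]
        totals[tag][0] += obtained
        totals[tag][1] += QUESTIONS[qid][2]
    return {tag: tuple(pair) for tag, pair in totals.items()}


# Two hypothetical students with the same total of 79 out of 100.
student_a = {"Q1": 20, "Q2": 29, "Q3": 20, "Q4": 10}
student_b = {"Q1": 12, "Q2": 27, "Q3": 14, "Q4": 26}

for name, scores in [("A", student_a), ("B", student_b)]:
    print(name, sum(scores.values()), breakdown(scores, 0), breakdown(scores, 1))
```

Both hypothetical students total 79, but the breakdown tells two different stories: student A has full marks on recall and loses most marks on the geometry application question, while student B’s lost marks are concentrated in recall. The same tagging extends to threads, domains, or question formats by adding fields to each question.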

Back in 2018, when I was heading academics at Purkal Youth Development Society (PYDS), we changed the question paper format so that papers were (a) generated centrally and anonymously and (b) scored by skills and by threads/topics. The insights this provided helped identify remedial strategies for individual students as well as teachers’ professional development needs.

As mentioned above, there are multiple aspects that need support and, hence, elucidation. Going into each of them, along their various dimensions, would fill a tome. I will restrict this article to two of them and aim to outline how schools can take a step or two in the right direction. I also believe that these two are vital to instilling a data-driven mindset in the school.

Most importantly, data are required to help us get better at schooling and educating. The intention is betterment; data analysis is a tool. One shouldn’t confuse one for the other. Before I proceed, I would like to caution the enthusiastic reader:

a) Start small, and scale only when the results of analysis have been verified over 2-3 cycles and all stakeholders are convinced.

b) Do not hoard data without using it.

Formulation of hypothesis

Before any data are collected, one needs to be clear about what we are exploring and/or seeking to improve. I will leave pure action research out of this discussion (I would heartily recommend Action Research: Principles and Practice by Jean McNiff for a deep study). In short: what is the question we are asking ourselves that data can help answer? It could be “Would providing pointers for improvement, instead of scores, on tests improve performance?” or “Does creating pay scales democratically and transparently reduce attrition?” or any of the many questions that plague schools. It could also be a proven metric, e.g., absenteeism, that one decides to track.

Once the question or the metric is in place, there must be a joint conversation between school leaders, teachers, students, and parents to ensure that everyone is on the same page and is convinced about the methodology. It also gives everyone a chance to challenge, or lend perspective to, the particular data being correlated with an outcome. For example, speaking up in class might not necessarily indicate understanding; or at least the converse holds: not speaking up needn’t imply a lack of understanding. Some students might prefer to demonstrate their comprehension in different modes. Some teachers, perhaps, might have no clue why parental income or the nature of parental employment affects learning, and such a discussion would help them.

Once everyone is on the same page, we can afford to get creative about admissible/permissible evidence and data. Students might come up with relevant means of proving command over a particular objective. This increases the authenticity of assessment and of responses.

Students and teachers can also be sensitized to observe closely, so that they can challenge incorrect conclusions. For instance, if every student and teacher on a school bus is aware of the question (say: Does changing bus drivers across bus routes decrease accidents?), then they might be able to clarify that a particular incident was not due to a change of driver and could have happened no matter who was driving.

Data are required at various levels for various purposes. One needs data about student learning and pedagogical efficacy, for conducting action research, for optimizing resources, as feedback, and so on. Attempting to collect all of it at the outset is a recipe for disaster. Discuss, debate and identify your top hypotheses (and, thus, the data points that validate or invalidate them) or indicators of student learning, teacher well-being, etc. At the outset, when data aren’t yet part of one’s cultural fabric, it makes sense to start with fewer indicators: see the value in them, identify ways of collecting and responding to them, and be largely convinced of the utility of tracking them before moving on to collect more and reap the benefits.

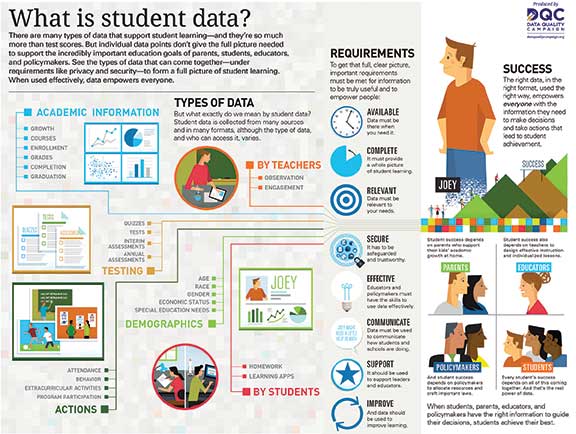

While data requirements to aid decision making varies, student data that is required, often across most questions impacting learning, includes (a) demographic information (usually obtained as part of the admission form) (b) academic information (c) assessment information (d) teacher observational info (also backed by data) (e) student performance (usually non-cognitive) and (f) student participation. A useful infographic about student data as designed by DQC (Data Quality Campaign) is shown below:

Data literacy

Once we have our question on the table, the most important skill that every stakeholder needs is data literacy. If one doesn’t understand what is being collected, what else might be relevant, and concepts such as dependency, correlation and causation, then merely asking the question is wishful thinking.

While “Data Handling” is a common thread of chapters in CBSE mathematics, what is required is a skill that goes beyond the mathematics. The ability to see how rounding off a numerical value can skew outcomes, or how an unnatural categorization or grouping can tell a completely different story, is a skill that needs development across subjects and outside them as well. Critical thinking skills are developed in the process too. This skill also makes it possible to surface bias in data collection or analysis, and thus to dispel notions of partiality.
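A small Python illustration of both effects follows, with invented marks and an assumed pass mark of 35 (neither comes from any real class, and the function names are hypothetical): rounding marks to the nearest 5 before applying the pass mark changes how many students pass, and moving a band boundary changes what share of the class appears to need support.

```python
# Hypothetical marks (out of 100) for a class of ten; illustrative only.
MARKS = [32, 34, 36, 38, 41, 44, 58, 61, 66, 72]
PASS_MARK = 35  # assumed pass mark for this illustration


def round_half_up(mark, step):
    """Round a non-negative integer mark to the nearest multiple of step (halves go up)."""
    return (mark + step // 2) // step * step


def count_passing(marks, pass_mark):
    """Number of students at or above the pass mark."""
    return sum(1 for m in marks if m >= pass_mark)


def share_below(marks, cutoff):
    """Fraction of students whose mark falls below a band cutoff."""
    return sum(1 for m in marks if m < cutoff) / len(marks)


# Rounding before applying the pass mark: 34 becomes 35 and now "passes".
raw_pass = count_passing(MARKS, PASS_MARK)
rounded_pass = count_passing([round_half_up(m, 5) for m in MARKS], PASS_MARK)

# Grouping: the same marks under two different "needs support" band cutoffs.
needs_support_40 = share_below(MARKS, 40)
needs_support_60 = share_below(MARKS, 60)

print(raw_pass, rounded_pass, needs_support_40, needs_support_60)
```

With these hypothetical marks, 8 students pass on raw marks but 9 pass after rounding, and the share flagged as needing support is 40% with a cutoff of 40 but 70% with a cutoff of 60. Nothing about the students changed; only the treatment of the numbers did.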

There is immense scope for developing metacognitive skills as well. When a student being assessed for, say, mathematical skills is able to look at the content and ask “What would truly demonstrate mastery of this content?”, they can contribute to the conversation about whether, say, a summative assessment score is a sufficient indicator of mastery. Including students in the data collection and analysis processes creates space for ownership and brings about transparency. When each student has access to all the data collected about himself/herself, s/he has the opportunity to ask or rephrase questions that would help him/her succeed. This is lost when data are kept away from students (usually on the suspicion that they will manipulate the data for favourable projections). Such real-world data analysis exercises also bring the topic to life because it is so relevant and personal.

For teachers, data literacy must be a mandatory professional development programme. Sadly, even the latest B.Ed. curriculum doesn’t have a module on data literacy. The day when data about teacher professional development (TPD) programmes are collected and analyzed is still far off! It is important to teach every teacher about the goldmine of information hidden in their assessments of and interactions with students. Requiring them to back their hunches and convictions with data will push them to get better. It will help them respond better to the needs of the student cohort they are dealing with, as well as present their cases better to school management. Making it a joint exercise with other teachers helps surface issues like “K’s challenge is not the mathematics in word problems but the language in word problems”.

School leaders too need to be adept at this skill, especially for key decisions. I have known many a principal who would rate a teacher on hunches and a few clever readings of their performance in class, but who barely ever sat in on one of their classes! Again, at PYDS, I asked teachers whose feedback should carry the highest weightage, and what minimum number of observations would lend credibility to a performance summary. We finally agreed on numbers, thereby eliminating instances of impressionistic judgment and favouritism. Teachers rejoiced in this change of process, until the same was asked of them in how they judged students! When students shared instances of teachers making arbitrary judgments about them without ever hearing them out or trying to understand their challenges, the process for students changed too, necessitating more data-driven and intentional feedback that each student got to review. Teachers had to collect periodic feedback from all of their students to identify trends in their concerns.
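One way such an agreement could be encoded is sketched below in Python. The feedback sources, weights, minimum counts, and the function `performance_summary` are hypothetical placeholders (the numbers agreed at PYDS are not stated here); the point of the sketch is that no summary is produced until every source has contributed its agreed minimum number of observations.

```python
from statistics import mean

# Hypothetical weights (summing to 1) and minimum observation counts per source.
WEIGHTS = {"students": 0.5, "peers": 0.3, "school_leader": 0.2}
MIN_OBSERVATIONS = {"students": 10, "peers": 3, "school_leader": 3}


def performance_summary(observations):
    """Weighted mean rating across feedback sources.

    observations maps each source to a list of ratings (e.g. on a 1-5 scale).
    Returns None if any source has fewer ratings than its agreed minimum,
    so that no summary is built from too little evidence.
    """
    for source, minimum in MIN_OBSERVATIONS.items():
        if len(observations.get(source, [])) < minimum:
            return None
    return sum(weight * mean(observations[source]) for source, weight in WEIGHTS.items())


ratings = {
    "students": [4, 5, 3, 4, 4, 5, 4, 3, 4, 4],
    "peers": [4, 3],  # only two peer observations so far
    "school_leader": [3, 4, 4],
}
print(performance_summary(ratings))  # None: peers are below the agreed minimum
```

Refusing to return a number, rather than returning one computed from two classroom visits, is the design choice that keeps impressionistic judgments out of the summary.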

Schools must make an extra effort to include parents in this initiative, playing the role of coach and advocate for them. Making an exploration or conclusion explainable to parents is a vital responsibility of the school. Challenging conclusions on their behalf is one step towards gaining their trust and ensuring that data are at the centre of all conversations. This helps the conversation between parent and child as well. PTMs would be a lot more meaningful when parents come in with a better understanding of the methodology and the data collected.

To summarize, there are many steps to becoming a data-driven institution. Some steps also require improvements in processes involving government agencies (either that they begin collecting certain information or that they share it better). However, there is enough work that needn’t involve government agencies and yet provides immense value to the student, teacher, school leader, and community. Increased transparency supports greater community participation and can even make access to funds easier. By periodically publishing indicators, schools increase transparency and hold to their commitment to constant improvement. Students with access to data about their own development are likely to be more informed about, and invested in, that development. Teachers too benefit from the increased transparency and might, hence, be more committed to the organization and its educational goals.

I treat the two facets discussed above as key because they are fundamental to any initiative we might take. Without universal data literacy and without a structured and democratic way of posing questions, schools are unlikely to improve in planned and predictable ways.

The author is a computer scientist-turned-educator who has largely been working with schools for rural and/or underprivileged children. He has also contributed curriculum design to the EdTech industry and has trained teachers and educational leaders in situ. He can be reached at anand.krishnaswamy@gmail.com.
