Sudipto Bhattacharya and Subha Das Mollick

The first programming languages predate the modern computer. Around 300 BC, Euclid described an algorithm to compute the highest common factor (HCF) of two numbers. There are also examples of Latin verses that are basically algorithms. In the 9th century AD, Abu Jafar Muhammad Ibn Musa al-Khwarizmi, the court mathematician of Baghdad from whose name the word "algorithm" is derived, wrote two books – one on algebra and the other on algorithms. The book on algorithms has compact procedures for solving different algebraic equations. Thus we see that over the ages algorithms have been developed to meet complex computational challenges.

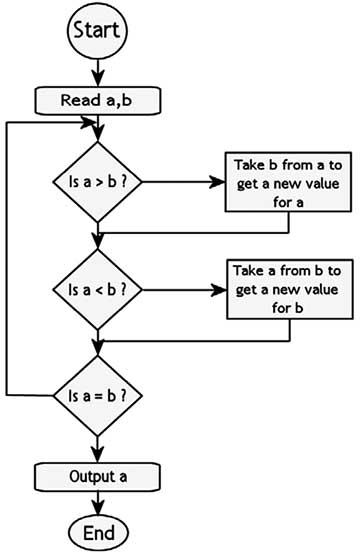

An algorithm is a self-contained sequence of actions for computation, data processing or automated reasoning tasks. All computer programs follow algorithms. In ancient times algorithms were often codified in verse, using natural language. Today, Euclid’s algorithm for finding the HCF of two numbers would look something like this:
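As a minimal sketch, the subtraction-based form of Euclid’s procedure can be written in Python. The function name `hcf` and the check for positive inputs are assumptions added for illustration; they are not part of Euclid’s text.

```python
def hcf(a, b):
    """Return the highest common factor of two positive integers
    using repeated subtraction (a sketch of Euclid's procedure)."""
    # Assumption: inputs must be positive, otherwise the loop may never end.
    if a <= 0 or b <= 0:
        raise ValueError("both numbers must be positive integers")
    while b != 0:
        if b >= a:
            b = b - a   # B <- B - A
        else:
            a = a - b   # A <- A - B
    return a            # when B reaches 0, A holds the HCF


print(hcf(48, 18))  # prints 6
```

Each pass through the loop makes one of the two numbers smaller, so the loop is guaranteed to stop for positive inputs.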

The algorithm proceeds by successive subtractions in two loops. IF the test B ≥ A yields “yes” (more accurately, if the number b in location B is greater than or equal to the number a in location A), THEN the algorithm specifies B ← B − A (meaning the number b − a replaces the old b). Similarly, IF A > B, THEN A ← A − B. The process terminates when (the contents of) B is 0, yielding the HCF in A.

Algorithms repeat a procedure till a desired result is obtained. Every node of the algorithm branches into two options: either the end result is obtained or the procedure is repeated. Students may be asked to write algorithms for arranging a set of numbers in ascending or descending order, for making a subset of the multiples of 3 from a given set of numbers, and for many more simple operations. The first step in writing a program is developing an algorithm.

In the 17th century, Gottfried Wilhelm Leibniz, the German mathematician who developed calculus independently of Newton, invented a mechanical calculator that could perform all four arithmetic operations. He also developed the binary number system in its modern form and predicted that “human reasoning can be reduced to a formal symbolic language, in which all arguments would be settled by mechanical manipulation of logical concepts”. This was perhaps the first conception of a machine carrying out programmed operations.

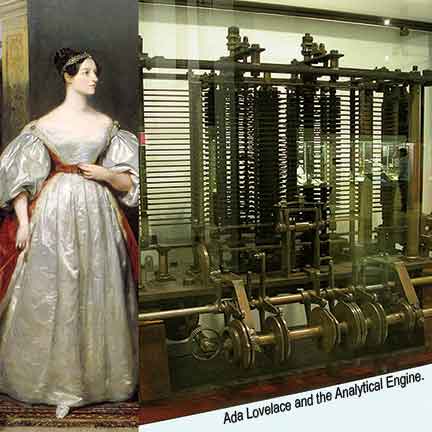

In the 19th century, Ada Lovelace, daughter of the poet Lord Byron, wrote an algorithm for calculating Bernoulli numbers, a sequence of rational numbers that arises in formulas for sums of powers of integers. Her algorithm is recognized by some historians as the world’s first computer programme. She wrote it for Charles Babbage’s Analytical Engine, a machine envisaged by Babbage for carrying out complex mathematical computations. She also wrote detailed notes on how the machine could be made to handle letters, symbols and numbers.

Meeting machine challenges

But how would the data be fed into the engine? The most viable option seemed to be punched cards, of the type used in Jacquard’s loom.

In 1896, Herman Hollerith founded the Tabulating Machine Company. In 1906, he improved the design of his tabulating machine, introducing an automatic card feed mechanism and the key punch. This simplified writing codes for different jobs. Hollerith’s company later merged with other corporations and in 1924 became International Business Machines Corporation (IBM). IBM continued to use punched cards well into the 1970s. The cards had 80 columns and 12 rows, which is the origin of the 80-character line limit that many coding conventions follow even today.

Coding to programming

Grace Murray Hopper once said, “We were not programmers in those days. The word had not yet come over from England. We were ‘coders’.” If one takes a look at the manual of the Mark I, the IBM Automatic Sequence Controlled Calculator, it is a mind-boggling list of codes. Programming had always been tedious and labour intensive.

The question is: how and when did these programming codes begin to take the form of a language? To some people the answer depends on how much power and human-readability is required before the status of “programming language” is granted.

A programming language describes computation on some abstract machine. Natural languages, such as English, are ambiguous, fuzzily structured and have large (and changing) vocabularies. Computers have no common sense, so computer languages must be precise: they have a small set of well-defined rules for composing programs, and strictly controlled vocabularies in which unknown words must be defined before they can be used.

Computers may work differently, but virtually all computers understand only binary code, that is, a series of 0s and 1s. It correlates well with electronic switching: off=0, and on=1. At the beginning, in order to program a computer one had to speak its language, called machine language. That is, one had to work with 0s and 1s. Because this is tedious and error prone, computer scientists have tried hard to abstract this process to make it easier for people to make programs.
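To make the idea of binary code concrete, the short Python sketch below shows how a number and a letter might look as a series of 0s and 1s. The helper name `to_bits` and the 8-bit width are assumptions chosen purely for illustration.

```python
def to_bits(value, width=8):
    """Return a non-negative integer as a string of 0s and 1s,
    padded with leading zeros to the given width."""
    return format(value, f"0{width}b")


print(to_bits(5))         # prints 00000101
print(to_bits(ord("A")))  # the letter A has code 65: prints 01000001
```

Reading the string back with `int("01000001", 2)` gives 65 again, so nothing is lost in the translation.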

Assembly language is not machine code, but it comes close. Its instructions correspond directly to those of the underlying architecture, so there is almost no abstraction. To turn assembly language into machine code, an ‘assembler’ is used. The assembler was among the earliest software tools to be invented.

In the 1940s the first recognizably modern, electrically powered computers were created. The limited speed and memory capacity forced programmers to write hand tuned assembly language programs. It was soon discovered that programming in assembly language required a great deal of intellectual effort and was error-prone.

In 1948, Konrad Zuse published a paper about his programming language Plankalkül. However, it was not implemented in his time and his original contributions were isolated from other developments.

In the 1950s, John Backus at IBM developed Fortran, a high-level language meant for scientific calculations. Fortran is short for Formula Translation. It is still one of the most popular languages in the area of high-performance computing and is the language used for programs that benchmark and rank the world’s fastest supercomputers. Fortran is credited with establishing the convention of using the asterisk (*) as the multiplication sign. Here is an example of a Fortran program:

```fortran
program hello
  print *, "Hello World!"
end program hello
```

Here is another short program in FORTRAN for computing the area of a circle:

```fortran
program circle_area
  real r, area, pi
  parameter (pi = 3.14159)
  ! This program computes the area of a circle.
  print *, "What is the radius?"
  read *, r
  area = pi * r ** 2
  print *, "The area is", area
  print *, "Bye!"
end program circle_area
```

COBOL (COmmon Business-Oriented Language) was designed for business use. It was an attempt to make programming languages more similar to English, so that both programmers and management could read it. Here is a sample of the shortest programme written in COBOL:

```cobol
      $ SET SOURCEFORMAT"FREE"
IDENTIFICATION DIVISION.
PROGRAM-ID. ShortestProgram.

PROCEDURE DIVISION.
DisplayPrompt.
    DISPLAY "I did it".
    STOP RUN.
```

BASIC (Beginner’s All-purpose Symbolic Instruction Code) was designed in 1964 by John G. Kemeny and Thomas E. Kurtz at Dartmouth College in New Hampshire.

BASIC was developed specifically for timesharing. It was a very stripped-down version of Fortran, to make it easier to program.

It came with a clever way of editing programs using line numbers, which served both to order the statements and as targets for jumps such as ‘GOTO’.

ALGOL 60 (ALGOrithmic Language 1960) had a great influence on modern computer languages.

In addition to these, there have been languages like LISP, PASCAL, B and of course, C. C was developed by Dennis Ritchie at the Bell Labs between 1969 and 1973. C has been unbelievably successful and has inspired the following languages:

• C++ (1979)

• Objective-C (1986)

• Perl (1987)

• Java (1991)

• Python (1991)

• JavaScript (1995)

• PHP (1995)

• C# (1999)

• Go (2007)

Debates, consolidations, developments

The 1960s and 1970s saw considerable debate over the merits of “structured programming”, which essentially meant programming without the use of GOTO. This debate was closely related to language design: some languages did not include GOTO, which forced structured programming on the programmer. Although the debate raged hotly at the time, nearly all programmers now agree that, even in languages that provide GOTO, it is bad programming style to use it except in rare circumstances.

One important trend in language design was an increased focus on programming for large-scale systems through the use of modules, or large-scale organizational units of code. Modula, Ada, and ML all developed notable module systems in the 1980s. Module systems were often wedded to generic programming constructs – generics being, in essence, parameterized modules (see also polymorphism in object-oriented programming).

The 1990s saw no fundamental novelty, but much recombination and maturation of old ideas. A big driving philosophy was programmer productivity. Many “rapid application development” (RAD) languages emerged; they usually came with an IDE and garbage collection, and were descendants of older languages. Most such languages were object-oriented.

New trends

In the world of technology, precision, rigour, science, logic and mathematics rule over fads and whims. Trends are dictated by greater efficiency, greater adaptability and increased customization. It wasn’t long ago that people who created a new programming language had to build everything that turned code into the bits fed to the silicon. Then someone figured out that they could piggyback on the work that came before. Now people with a clever idea simply write a preprocessor that translates the new code into an older language that already has a rich set of libraries and APIs.

Just as professional programmers are working round the clock to make search engines faster and more efficient, to make social networking platforms error free even in the face of heavy traffic, amateur coders are developing their own apps.

References

- https://www.programiz.com/c-programming/examples/hcf-gcd

- http://www.csis.ul.ie/cobol/examples/Accept/Shortest.htm

- http://www.math.hawaii.edu/~hile/fortran/fort1.htm

- http://www.infoworld.com/article/3188464/application-development/21-hot-programming-trends-and-21-going-cold.html

- https://foorious.com/articles/brief-history-of-programming-languages/

Further reading

- A concise presentation of history of programming: https://www.csee.umbc.edu/courses/331/fall08/0101/notes/02/02history.pdf

- Programmers at Work is a book of interviews with programmers, conducted by Susan Lammers to understand their creative minds and their motivations. The book is out of print. On her blog, Lammers discusses the impact of the book and has been uploading the interviews separately: https://programmersatwork.wordpress.com/tag/susan-lammers/

- A delightful rapid reader on how operating systems and other programmes became commodities to be sold in the market: http://faculty.georgetown.edu/irvinem/theory/Stephenson-CommandLine-1999.pdf

- An engaging book on the history of programming: Go To: The Story of the Math Majors, Bridge Players, Engineers, Chess Wizards, Maverick Scientists, and Iconoclasts – the Programmers Who Created the Software Revolution by Steve Lohr

Sudipto Bhattacharya is academic head, iLEAD Institute, Kolkata. He can be reached at sdipto@gmail.com

Subha Das Mollick is a media teacher and documentary filmmaker. She is also the secretary of Bichitra Pathshala. She may be reached at subha.dasmollick@gmail.com.